steveintoronto


I don't follow the PISA scores like I used to, not least because they don't necessarily indicate all they're touted to. To be clear, this doesn't degrade your talking points in this string one iota; if anything, it probably reinforces them. But two nations have dipped significantly from those now older figures:[...]

Note the source: factsmaps.com and, in turn, the OECD.

Canada (especially Ontario) and Finland. Finland has a national score due to a national education system. Canada's is fragmented due to provincial jurisdiction over education. I'm surprised the OECD/PISA published that as a national map.

This is where the 'Cons' could have had a more meaningful point: Alberta's scores have held well, if not increased (I'll Google for reference later) and Ontario's have slipped significantly, math being a big one.

Finland has slipped, ostensibly due to having had a biased evaluation to begin with. Again, I'll try to reference and link later. The UK is similar, but the UK's situation (from memory) is down to the shocking finding that private schools are now scoring lower than 'public' (in our terminology) ones, for a number of reasons.

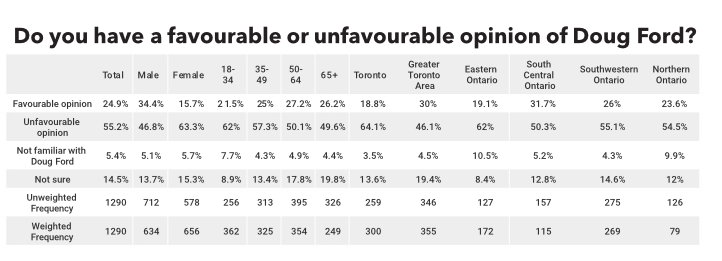

The point is this: No matter how you cut this, Ford is strangling what life there is in today's Ontario schools. Not that he'd know the difference...school's forr ths interleckskm ayleet...

Quick Google for now:

https://www.ctvnews.ca/canada/parents-call-for-math-education-reform-as-test-scores-slide-1.4117570

Not having great luck with my search tags at this time. It's an art, and I'm getting a failing grade at the moment! Aha, but I can fudge my mark by trying at a more apt time later...which brings me to this, which I hinted at earlier: PISA's usual unit of measurement is the jurisdiction responsible rather than the national average:

https://www.forbes.com/sites/realspin/2017/01/04/are-the-pisa-education-results-rigged/#4d9df3171561[...]

Now, if all countries were to take this approach, we would see London selected to be the sole representative of Britain, or Boston and its suburbs representing the U.S. This year, in fact, saw a separate score calculated for Massachusetts, which if taken as the nation’s results, would grab the top spot in reading with eight other nations, 2nd place in science with ten other nations, and 12th in math.

If we dig deeper into the sampling, we come across another potential problem with the PISA testing: that the sampling done on mainland China (Beijing, Jiangsu, Guangdong and Shanghai) and other cities was not taken from a wide variety of schools. Rather, the very best schools were chosen and the very best students were cherry-picked from those schools. Ong Kian Ming, a lawmaker in Malaysia recently raised this issue concerning Malaysia’s PISA results, claiming the education ministry attempted to rig the sample size in order to boost the scores. Ong added that the biased sample of schools in favor of high-performing schools can also be seen in Pisa 2015’s own data on Malaysia. Ong claims a concerted effort to take more samples from higher-performing fully residential schools: "Out of a total sample of 8,861 students, 2,661 or 30% were from fully residential schools. This is clearly an over sampling of students from fully residential schools since they comprise less than 3% of the 15-year-old cohort in 2015."

Indeed, the sampling process has some flexibility, with each country or education system submitting a sampling frame to a research firm, which contains all age-eligible students for each of its schools. An independent research firm then draws a scientific random sample of a minimum of 150 schools with two potential replacements for each original school.

Since each country or education system is responsible for recruiting the sampled schools, if one of the randomly chosen schools refuses to participate, for any reason, the country or educational system can choose from up to two neighboring schools. Replacement schools can represent up to 35% of the sampling frame. Once the schools are chosen, each country or education system submits student listing forms. On test day, student participation must be at least 80%.

Which begs some questions, such as, did some of the weaker students not take the test? Could some of the weaker schools have refused to participate? Only an independent audit of the results could prove conclusively whether the very best fruit was picked from the tree, but the sampling process does not preclude the potential for manipulation.
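For what it's worth, the Malaysia figures quoted above are easy to sanity-check. This is only a rough sketch using the numbers as quoted (8,861 sampled, 2,661 from fully residential schools, "less than 3%" of the cohort); the variable names are mine, and the 3% is treated as an upper bound, so the result is a minimum, not an exact figure:

```python
# Figures as quoted from Ong Kian Ming in the Forbes piece.
sampled_total = 8861          # total students in the sample
sampled_residential = 2661    # of those, from fully residential schools

# "Less than 3%" of the 15-year-old cohort, so 0.03 is an upper bound (assumption:
# the true share is unknown here, only that it is below this).
cohort_share_upper_bound = 0.03

# Share of the sample drawn from fully residential schools.
sample_share = sampled_residential / sampled_total

# Lower bound on how over-represented those schools are in the sample.
min_overrepresentation = sample_share / cohort_share_upper_bound

print(f"Share of sample: {sample_share:.1%}")
print(f"Over-represented by at least {min_overrepresentation:.1f}x")
```

So the quoted 30% checks out, and on those numbers fully residential schools would be over-represented by a factor of ten or more. It says nothing about whether the numbers themselves are right.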

[...]

Does this debase @Northern Light's point? In some ways perhaps, but the real danger is the likes of Doug Ford reverse engineering (there's a phrase he can't handle) this to make it look better than it is.

Rather like polishing a turd and putting it in a crack pipe...
